Last month I got frustrated after changing the title format of all my posts. Previously, my post titles were formatted like this:

Post Title – Michael Aulia’s Technology & Reviews Blog

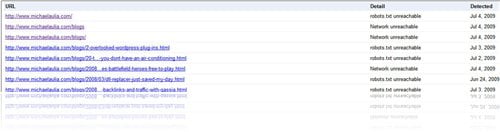

After reading a few replies in a forum thread, I decided to change the title to just Post Title for SEO (can anyone confirm this helps? :)). After the change, I logged in to Google Webmaster Tools and found lots of “Network unreachable” and “robots.txt unreachable” errors on my Google Sitemap. As a result, my traffic dropped by several hundred visitors for several weeks :(

After several weeks of frustration, I found the cause of these sitemap unreachable errors. It turned out they weren’t caused by the title format change. Google’s bots did need to re-index my blog after the change, but they weren’t able to do so properly.

Oh, believe me, I tried almost everything: rebuilding the sitemap file, moving it, writing a few lines of it by hand, and even wondering whether my server had been temporarily down when Google’s bots visited my blog.

However, after a week or two I was still receiving these errors, so I realized something must be wrong. I found a couple of bloggers who had the same problem because their web hosts had blocked one of Google’s bots. When I raised this with HostGator, the support guy said they weren’t blocking any bots and even gave me the Googlebot IP ranges on their whitelist:

- 216.239.32.0/19 (Googlebot)
- 64.233.160.0/19 (Googlebot)
- 72.14.192.0/18 (Googlebot)
- 209.85.128.0/17 (Googlebot)
- 66.102.0.0/20 (Googlebot)
- 74.125.0.0/16 (Googlebot)
- 66.249.64.0/19 (Googlebot)
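If you want to check whether a crawler IP from your own access logs falls inside ranges like these, a minimal sketch using Python’s standard `ipaddress` module could look like the one below. Keep in mind that this list came from my host’s support staff, not from Google, so an address outside it may still be a genuine Google crawler. The function name `in_whitelist` and the sample addresses are hypothetical, chosen just for illustration.

```python
import ipaddress

# Ranges as listed by the host's support staff (not an official Google list).
WHITELISTED_RANGES = [
    "216.239.32.0/19",
    "64.233.160.0/19",
    "72.14.192.0/18",
    "209.85.128.0/17",
    "66.102.0.0/20",
    "74.125.0.0/16",
    "66.249.64.0/19",
]

# Parse each CIDR string once into a network object.
NETWORKS = [ipaddress.ip_network(cidr) for cidr in WHITELISTED_RANGES]


def in_whitelist(ip: str) -> bool:
    """Return True if the address falls inside any of the whitelisted ranges."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in NETWORKS)


if __name__ == "__main__":
    # Hypothetical addresses, e.g. copied from your own access logs.
    for ip in ["66.249.66.1", "8.8.8.8"]:
        print(ip, "whitelisted" if in_whitelist(ip) else "NOT in whitelist")
```

A crawler IP that shows up in your logs but returns `False` here is worth asking your host about specifically.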

I believed him, so I went on googling for hours and days, trying every possible solution I could think of. Then I stumbled onto a blog post (unfortunately I forget where it was :( ) saying that if your host claims they aren’t blocking any Google bots, ask again. So I did, and another staff member replied with an apology: he had found a Google bot that they were blocking (one that wasn’t on that list)! He fixed it, and now Google’s bots are celebrating and partying on my blog.

Conclusion: XML sitemap errors (“Network unreachable” or “robots.txt unreachable”) can have many causes, but make sure your web host isn’t blocking one of Google’s spider bots! (And if the errors persist after you’ve tried a few other things, ask them to check again!)
